When we derived our formula for the coefficients of regression, we minimised the sum of the squares of the differences between our predicted values for \(Y\) and the true values of \(Y\). This technique is called ordinary least squares (OLS). It is sometimes characterised as the best linear unbiased estimator (BLUE), but what exactly does this mean?

First, let’s think about regression again. Suppose we have \(m\) samples of a set of \(n\) predictors \(X_i\) and a response \(Y\). We can say that the predictors and response are observable, because we have real measurements of their values. We hypothesise that there is an underlying linear relationship of the form

\[

Y_j = \sum_{i = 1}^n X_{i,j} \beta_i + \epsilon_j

\]

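where the \(\epsilon_j\) represent noise or error terms. We can write this in matrix form as

\[

y = X \beta + \epsilon

\]

where \(y\) and \(\epsilon\) are column vectors of length \(m\), \(\beta\) is a column vector of length \(n\), and \(X\) is the \(m \times n\) matrix whose \(j\)th row holds the predictor values for sample \(j\) (so its \((j, i)\) entry is \(X_{i,j}\)).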

Since they are supposed to be noise, let’s also assume that the \(\epsilon\) terms have expectation zero, that they all have the same finite standard deviation \(\sigma\), and that they are uncorrelated. In matrix form, \(\mathbb{E}(\epsilon) = 0\) and \(\text{Var}(\epsilon) = \sigma^2 I\).

This is quite a strong set of assumptions. In particular, we are assuming that there is a real linear relationship between our predictors and response that is constant over all our samples, and that everything else is just uncorrelated random noise. (We’re also going to quietly assume that the values in \(X\) are fixed rather than random, and that our predictors are linearly independent, so \(X\) has full column rank and \(X^TX\) is invertible.)
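To make these assumptions concrete, here is a minimal sketch in Python with NumPy that simulates data from the model. The sizes `m` and `n`, the coefficients `beta` and the noise level `sigma` are hypothetical values chosen only for illustration, and drawing the errors from a normal distribution is just one convenient way to satisfy the assumptions above (which only ask for mean zero, equal variance and no correlation).

```python
import numpy as np

# Hypothetical sizes and coefficients, chosen only for illustration.
rng = np.random.default_rng(0)
m, n = 200, 3                          # m samples, n predictors
beta = np.array([2.0, -1.0, 0.5])      # the "true", normally unobservable beta
sigma = 0.3                            # common standard deviation of the errors

X = rng.normal(size=(m, n))            # design matrix: row j holds sample j's predictors
eps = rng.normal(scale=sigma, size=m)  # independent, mean-zero, equal-variance noise
y = X @ beta + eps                     # the model y = X beta + epsilon

# Full column rank, so X^T X is invertible.
print(np.linalg.matrix_rank(X))        # 3
```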

This can be a little unintuitive, because normally we think about regression as knowing our predictors and using those to estimate our response. Here, instead, we imagine that we know a set of values for our predictors and response, and use those to estimate the underlying linear relationship between them. More concretely, the vector \(\beta\) is unobservable, as we cannot measure it directly, and when we “do” a regression we are estimating its value. The ordinary least squares estimate of \(\beta\) is given by

\[

\hat{\beta} = (X^TX)^{-1}X^Ty

\]

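In everyday use we often don’t distinguish between the estimate \(\hat{\beta}\) and the true \(\beta\), but here we need to keep them separate: \(\hat{\beta}\) depends on the noisy observations \(y\), so it is itself a random variable.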
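As a sketch (assuming NumPy and made-up data), the formula can be transcribed directly and compared against NumPy's own least-squares solver. In practice, solving the normal equations \(X^TX\hat{\beta} = X^Ty\) with `np.linalg.solve`, or calling `np.linalg.lstsq`, is usually preferred to forming the inverse explicitly, for numerical stability.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n = 100, 3
beta = np.array([2.0, -1.0, 0.5])      # hypothetical true coefficients
X = rng.normal(size=(m, n))
y = X @ beta + rng.normal(scale=0.3, size=m)

# Direct transcription of beta_hat = (X^T X)^{-1} X^T y
beta_hat_formula = np.linalg.inv(X.T @ X) @ X.T @ y

# Numerically preferable: solve the normal equations (X^T X) b = X^T y
beta_hat_solve = np.linalg.solve(X.T @ X, X.T @ y)

# NumPy's least-squares solver, for comparison
beta_hat_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

print(beta_hat_formula)
```

All three agree up to rounding error, and each is close to (but not exactly equal to) the hypothetical `beta`, because of the noise.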

OLS is Linear and Unbiased

First of all, what makes an estimator linear? An estimator is linear if it is a linear combination of the entries of \(y\), or equivalently, if it can be written as \(Ay\) for some matrix \(A\) that does not depend on \(y\). This is true of \(\hat{\beta}\), with \(A = (X^TX)^{-1}X^T\).
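A quick numerical illustration of this linearity, under a hypothetical setup with random data: once \(X\) is fixed, the estimator is just multiplication by the matrix `A` below, so it respects sums and scalar multiples of \(y\). The helper name `ols` is illustrative only.

```python
import numpy as np

rng = np.random.default_rng(2)
m, n = 50, 3
X = rng.normal(size=(m, n))

# The matrix that maps y to beta_hat; it depends on X but not on y.
A = np.linalg.inv(X.T @ X) @ X.T       # shape (n, m)

def ols(y):
    """OLS estimate for the fixed design X: just the matrix product A @ y."""
    return A @ y

y1 = rng.normal(size=m)
y2 = rng.normal(size=m)
a, b = 3.0, -2.0

# Linearity: estimating from a*y1 + b*y2 equals a*estimate(y1) + b*estimate(y2)
lhs = ols(a * y1 + b * y2)
rhs = a * ols(y1) + b * ols(y2)
print(np.allclose(lhs, rhs))           # True
```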

Now, what is bias? An estimator is unbiased when the expected value of the estimator is equal to the underlying value. We can see that this is true for our ordinary least squares estimate by using our formula for \(Y\) and the linearity of expectation,

\[

\mathbb{E}(\hat{\beta}) = \mathbb{E}((X^TX)^{-1}X^TY) = \mathbb{E}((X^TX)^{-1}X^TX \beta) + \mathbb{E}((X^TX)^{-1}X^T \epsilon)

\]

and then, since \(X\) is fixed, \(\mathbb{E}((X^TX)^{-1}X^T \epsilon) = (X^TX)^{-1}X^T\mathbb{E}(\epsilon) = 0\), because the expectation of the \(\epsilon\) is zero. This gives

\[

\mathbb{E}(\hat{\beta})= \mathbb{E}((X^TX)^{-1}X^TX \beta) = \mathbb{E}(\beta) = \beta

\]

So now we know that \(\hat{\beta}\) is an unbiased linear estimator of \(\beta\).
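A Monte Carlo check can make unbiasedness tangible. The sketch below keeps a hypothetical design matrix \(X\) fixed, draws many independent noise vectors (normal noise assumed for convenience), computes \(\hat{\beta}\) for each, and averages the results; the average should sit close to the true `beta`, up to simulation error.

```python
import numpy as np

rng = np.random.default_rng(3)
m, n, sigma = 30, 3, 1.0
beta = np.array([2.0, -1.0, 0.5])      # hypothetical true coefficients
X = rng.normal(size=(m, n))            # fixed design, reused in every trial
A = np.linalg.inv(X.T @ X) @ X.T       # beta_hat = A @ y

trials = 20000
# Each row of eps is a fresh noise vector; each row of Y is one simulated y.
eps = rng.normal(scale=sigma, size=(trials, m))
Y = X @ beta + eps                     # shape (trials, m)
beta_hats = Y @ A.T                    # shape (trials, n): one beta_hat per trial

print(beta_hats.mean(axis=0))          # close to [2.0, -1.0, 0.5]
```

Individual estimates scatter around `beta`; only their average is pinned to it, which is exactly what unbiasedness promises.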

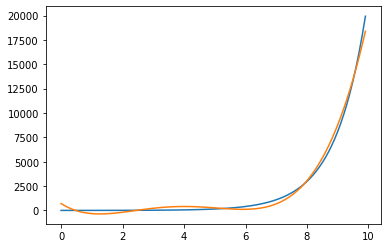

OLS is Best

How do we compare different unbiased linear estimators of \(\beta\)? All unbiased estimators have the same expectation, \(\beta\), so it is natural to call the one with the lowest variance the best: its estimates tend to land closest to the true value. It is important conceptually to understand that we are thinking about the variance of an estimator of \(\beta\), that is, how far away from \(\beta\) it usually gets.

Now, \(\beta\) is a vector, so there is not a single number representing the variance of its estimator. We have to look at the whole covariance matrix, not just a single variance term. We say that an estimator \(\gamma\) has lower variance than \(\gamma^{\prime}\) if the matrix

\[

\text{Var}(\gamma^{\prime}) - \text{Var}(\gamma)

\]

is positive semidefinite, or equivalently (by the definition of positive semidefinite, and since \(\text{Var}(c^T\gamma) = c^T\,\text{Var}(\gamma)\,c\)), if for all vectors \(c\) we have

\[

\text{Var}(c^T\gamma) \leq \text{Var}(c^T\gamma^{\prime})

\]
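This comparison can be checked numerically. The helper `has_lower_variance` below is a hypothetical name for a sketch that tests positive semidefiniteness through the eigenvalues of the difference, then confirms the equivalent condition by sampling many directions \(c\). The covariance matrices are made up for illustration.

```python
import numpy as np

def has_lower_variance(var_gamma, var_gamma_prime, tol=1e-10):
    """True if Var(gamma') - Var(gamma) is positive semidefinite.

    Uses the eigenvalues of the (symmetric) difference; tol absorbs rounding error.
    """
    diff = var_gamma_prime - var_gamma
    return bool(np.all(np.linalg.eigvalsh(diff) >= -tol))

# Hypothetical covariance matrices for two estimators of a 2-dimensional beta.
var_gamma = np.array([[1.0, 0.2],
                      [0.2, 0.5]])
var_gamma_prime = var_gamma + np.array([[0.3, 0.1],
                                        [0.1, 0.4]])   # the added matrix is positive definite

print(has_lower_variance(var_gamma, var_gamma_prime))   # True

# Equivalent check via Var(c^T gamma) = c^T Var(gamma) c for many directions c
rng = np.random.default_rng(4)
cs = rng.normal(size=(1000, 2))
ok = all(c @ var_gamma @ c <= c @ var_gamma_prime @ c for c in cs)
print(ok)                                               # True

# The order is only partial: here neither estimator dominates the other.
var_p = np.diag([1.0, 2.0])
var_q = np.diag([2.0, 1.0])
print(has_lower_variance(var_p, var_q), has_lower_variance(var_q, var_p))   # False False
```

The last comparison shows why the definition is stated as a matrix condition: an estimator can have lower variance in one direction and higher in another, in which case neither counts as better.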

First, let’s derive the covariance matrix for our estimator \(\hat{\beta}\). We have

\[

\text{Var}(\hat{\beta}) = \text{Var}((X^TX)^{-1}X^TY) = \text{Var}((X^TX)^{-1}X^T(X\beta + \epsilon))

\]

Since \((X^TX)^{-1}X^TX\beta = \beta\) is a constant, it adds no variance and no covariance terms, and using \(\text{Var}(A\epsilon) = A\,\text{Var}(\epsilon)\,A^T\) for a constant matrix \(A\), this is equal to

\[

\text{Var}((X^TX)^{-1}X^T\epsilon) = ((X^TX)^{-1}X^T) \text{Var}(\epsilon) ((X^TX)^{-1}X^T)^T

\]

which, since \(\text{Var}(\epsilon) = \sigma^2 I\) and \((X^TX)^{-1}\) is symmetric, equals

\[

\sigma^2 (X^TX)^{-1}X^TX (X^TX)^{-1} = \sigma^2 (X^TX)^{-1}

\]
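We can sanity-check \(\text{Var}(\hat{\beta}) = \sigma^2(X^TX)^{-1}\) by simulation. This sketch (hypothetical data, with normally distributed noise assumed for convenience) compares the formula with the sample covariance of many simulated \(\hat{\beta}\) values; the two should agree up to Monte Carlo error.

```python
import numpy as np

rng = np.random.default_rng(5)
m, n, sigma = 40, 3, 0.5
beta = np.array([1.0, 0.0, -2.0])            # hypothetical true coefficients
X = rng.normal(size=(m, n))
A = np.linalg.inv(X.T @ X) @ X.T

theory = sigma**2 * np.linalg.inv(X.T @ X)   # Var(beta_hat) = sigma^2 (X^T X)^{-1}

trials = 50000
Y = X @ beta + rng.normal(scale=sigma, size=(trials, m))
beta_hats = Y @ A.T                          # one beta_hat per row
empirical = np.cov(beta_hats, rowvar=False)  # n x n sample covariance matrix

print(np.round(theory, 4))
print(np.round(empirical, 4))
```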

Now, let’s compare \(\hat{\beta}\) to an arbitrary unbiased linear estimator. That is, suppose we are estimating \(\beta\) with some other linear combination of \(y\), given by \(Cy\) for some \(n \times m\) matrix \(C\) that does not depend on \(y\), with

\[

\mathbb{E}(Cy) = \beta

\]
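For a concrete example of such a \(C\), one option (chosen here purely as an illustration) is weighted least squares with arbitrary positive weights, \(C = (X^TWX)^{-1}X^TW\) for a diagonal matrix \(W\). It satisfies \(CX = I\), so \(\mathbb{E}(Cy) = CX\beta = \beta\). The sketch below checks this exactly and by simulation, with hypothetical data and weights.

```python
import numpy as np

rng = np.random.default_rng(6)
m, n, sigma = 30, 3, 1.0
beta = np.array([2.0, -1.0, 0.5])              # hypothetical true coefficients
X = rng.normal(size=(m, n))

# An alternative linear estimator C @ y: weighted least squares with
# arbitrary positive weights (drawn at random here purely for illustration).
W = np.diag(rng.uniform(0.5, 2.0, size=m))
C = np.linalg.inv(X.T @ W @ X) @ X.T @ W       # shape (n, m)

# Unbiasedness: E(Cy) = C X beta, which equals beta exactly when CX = I.
print(np.allclose(C @ X, np.eye(n)))           # True

# Monte Carlo check of E(Cy) = beta
trials = 20000
Y = X @ beta + rng.normal(scale=sigma, size=(trials, m))
estimates = Y @ C.T                            # one estimate Cy per row
print(estimates.mean(axis=0))                  # close to [2.0, -1.0, 0.5]
```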

Now, let’s look at the covariance matrix of \(Cy\). We are going to use a trick here: first we define the matrix \(D\) as \(C - (X^TX)^{-1}X^T\), then we have

\[

Cy = Dy + (X^TX)^{-1}X^Ty = Dy + \hat{\beta}

\]

Now, if we take the expectation of this, we have

\[

\mathbb{E}(Cy) = \mathbb{E}(Dy + \hat{\beta}) = \mathbb{E}(Dy) + \beta

\]

and expanding that expectation, we have

\[

\mathbb{E}(Dy) = \mathbb{E}(DX\beta + D\epsilon) = DX\beta + D\,\mathbb{E}(\epsilon) = DX\beta

\]

putting this all together we have,

\[

DX\beta + \beta = \mathbb{E}(Cy) = \beta

\]

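So \(DX\beta = 0\), and since an unbiased estimator must satisfy this whatever the true value of \(\beta\) is, we get \(DX = 0\). This might not seem very helpful right now, but let’s look at the covariance matrix of \(Cy\).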

\[

\text{Var}(Cy) = C \text{Var}(y) C^T = C \text{Var}(X\beta + \epsilon) C^T

\]

Now, by our assumptions about \(\epsilon\) (so \(\text{Var}(\epsilon) = \sigma^2 I\)), and as \(X\) and \(\beta\) are constants with no variance, this is equal to

\[

\sigma^2 C C^T = \sigma^2(D + (X^TX)^{-1}X^T)(D +(X^TX)^{-1}X^T)^T

\]

distributing the transpose, and using the fact that \((X^TX)^{-1}\) is symmetric, this is

\[

\sigma^2 (D + (X^TX)^{-1}X^T) (D^T + X(X^TX)^{-1})

\]

writing this out in full we have

\[

\sigma^2(DD^T + DX(X^TX)^{-1} + (X^TX)^{-1}X^TD^T + (X^TX)^{-1}X^TX(X^TX)^{-1})

\]

using our above result \(DX = 0\) (and so also \(X^TD^T = (DX)^T = 0\)), and cancelling \(X^TX(X^TX)^{-1} = I\), we get

\[

\sigma^2 DD^T + \sigma^2(X^TX)^{-1} = \sigma^2 DD^T + \text{Var}(\hat{\beta})

\]

We can rearrange the above as

\[

\text{Var}(Cy) - \text{Var}(\hat{\beta}) = \sigma^2 DD^T

\]

and a matrix of the form \(DD^T\) is always positive semidefinite, since \(c^T DD^T c = \lVert D^Tc \rVert^2 \geq 0\) for every vector \(c\). So we have shown that the variance of our arbitrary unbiased linear estimator is at least as great as that of \(\hat{\beta}\).
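To see the whole argument numerically, the sketch below takes weighted least squares with arbitrary positive weights, \(C = (X^TWX)^{-1}X^TW\), as a hypothetical competitor (it is linear and satisfies \(CX = I\), so it is unbiased), forms \(D = C - (X^TX)^{-1}X^T\), and checks that \(DX = 0\), that \(\text{Var}(Cy) - \text{Var}(\hat{\beta}) = \sigma^2DD^T\), and that this difference has no negative eigenvalues (up to a small rounding tolerance). The data and weights are made up.

```python
import numpy as np

rng = np.random.default_rng(7)
m, n, sigma = 25, 3, 0.8
X = rng.normal(size=(m, n))
A = np.linalg.inv(X.T @ X) @ X.T                 # OLS: beta_hat = A @ y

# Hypothetical competitor: weighted least squares with arbitrary positive weights.
W = np.diag(rng.uniform(0.5, 2.0, size=m))
C = np.linalg.inv(X.T @ W @ X) @ X.T @ W
D = C - A                                        # the matrix D from the derivation

print(np.allclose(D @ X, 0))                     # True: DX = 0

var_ols = sigma**2 * np.linalg.inv(X.T @ X)      # Var(beta_hat) = sigma^2 (X^T X)^{-1}
var_C = sigma**2 * C @ C.T                       # Var(Cy) = C (sigma^2 I) C^T
diff = var_C - var_ols

print(np.allclose(diff, sigma**2 * D @ D.T))     # True: the difference is sigma^2 D D^T
print(np.linalg.eigvalsh(diff).min() >= -1e-12)  # True: positive semidefinite
```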

So the result of all this is that, under our assumptions, we have a pretty good theoretical justification for using OLS in regression! However, this does not mean that OLS is always the right choice. If we want an estimator that is correct on average, an unbiased estimator is the natural choice for \(\beta\), but sometimes we care about other things, and we are actually quite happy to trade some bias for lower variance. We will see such estimators when we look at feature selection.